June 28, 2025

By Anix AI Team

AI Trust

Building Trust in AI: Why Transparency and Explainability Matter

As AI becomes central to enterprise decision-making, trust is critical to its adoption. Transparency and explainability are the pillars that keep AI systems accountable, fair, and reliable. This post explores why these principles are essential and how organizations can build trust in AI to drive responsible, effective outcomes.

The Trust Gap in AI

Many of today’s AI models operate as black boxes—delivering outputs without revealing how or why those decisions were made. While these models may achieve high accuracy, the lack of visibility into their internal logic raises red flags for stakeholders who demand accountability.

When users don’t understand how a decision was reached, they are less likely to accept it. When regulators can’t verify how outcomes are derived, compliance becomes a concern. And when developers can’t explain why models behave a certain way, correcting errors becomes difficult.

This trust gap slows adoption, increases risk, and undermines the value AI is meant to deliver.

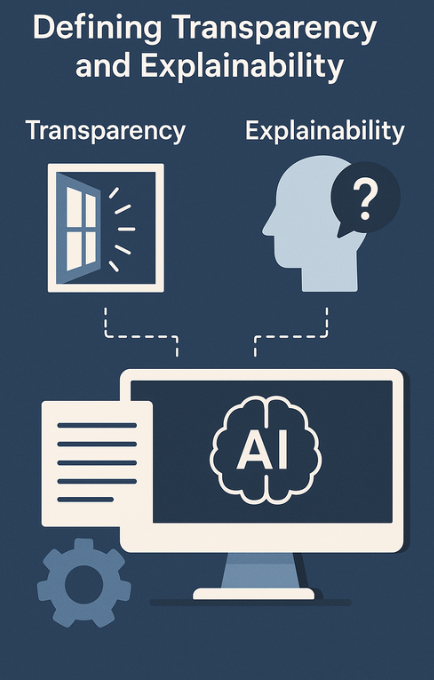

Defining Transparency and Explainability

Transparency in AI refers to openness about how an AI system is built, trained, and deployed. It covers the data sources, the algorithms used, the purpose of the model, and its potential risks.
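One lightweight way to make these elements concrete is a structured record stored alongside each model, similar in spirit to a model card. The sketch below is a minimal illustration, assuming a hypothetical `ModelCard` class whose field names mirror the items listed above; it is not a standard schema, and the example values are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical transparency record: data sources, algorithm, purpose, and risks."""
    name: str
    purpose: str
    algorithm: str
    data_sources: list[str]
    known_risks: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        # Any empty field is a transparency gap to resolve before deployment.
        return [key for key, value in asdict(self).items() if not value]


# Illustrative values only.
card = ModelCard(
    name="loan-approval-v3",
    purpose="Rank consumer loan applications for manual review",
    algorithm="Gradient-boosted decision trees",
    data_sources=["Internal application records, 2019-2024"],
)
print(card.missing_fields())  # ['known_risks']
print(json.dumps(asdict(card), indent=2))
```

Checking for empty fields in a release pipeline is one simple way to keep this documentation from being skipped.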

Explainability goes a step further. It’s the ability to clearly articulate why an AI system made a specific decision or prediction. This means translating complex statistical outputs into human-understandable terms—so that non-experts can evaluate, question, and trust the system.

While transparency is about seeing inside, explainability is about making sense of what’s inside.
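For simple models, translating a statistical output into plain language can be direct. Here is a minimal sketch, assuming a hypothetical linear credit-scoring model whose weights, feature names, and "typical applicant" baseline are all invented for illustration. Each feature's contribution is its weight times its distance from the baseline, and the most negative contributions become short, readable reasons.

```python
# Hypothetical linear credit-scoring model; weights, features, and baseline are illustrative.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}  # a "typical" applicant


def score(applicant: dict) -> float:
    """Linear score: bias plus the weighted sum of features."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant: dict, top_n: int = 2) -> tuple[dict, list[str]]:
    """Per-feature contributions relative to the baseline, plus plain-language reasons."""
    contribs = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    # Most negative contributions first; only features that actually lowered the score.
    reasons = [
        f"{f} lowered the score by {abs(c):.2f} compared with a typical applicant"
        for f, c in sorted(contribs.items(), key=lambda kv: kv[1])[:top_n]
        if c < 0
    ]
    return contribs, reasons


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "late_payments": 3}
contribs, reasons = explain(applicant)
for r in reasons:
    print(r)
# late_payments lowered the score by 1.60 compared with a typical applicant
# debt_ratio lowered the score by 0.60 compared with a typical applicant
```

Because the model is linear, the contributions add up exactly to the gap between the applicant's score and the baseline score, so the reasons account for the whole decision. Nonlinear models need attribution methods such as SHAP to recover a similar breakdown.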

Explainable AI Framework

Why They Matter More Than Ever

As AI systems become more embedded in core business operations, explainability is no longer optional. In regulated industries such as finance, healthcare, and insurance, organizations are often required to explain decisions that affect consumers; in many jurisdictions, for example, a lender that denies credit must give the applicant the principal reasons. Whether a customer is denied a loan or a patient receives a diagnosis, the organization must be able to justify the outcome. Failure to do so can result in legal consequences and reputational damage.

From an ethical standpoint, transparency and explainability are central to responsible AI. They ensure that biases can be identified, unfair outcomes challenged, and harmful consequences avoided. In a world where AI decisions can affect livelihoods, fairness and accountability are non-negotiable.

From a technical perspective, explainability also leads to better models. When developers understand why a model is making poor predictions, they can debug and retrain it more effectively. Explainability also builds confidence among internal teams who rely on AI for everyday decisions.

Building Explainable AI Systems

Designing AI systems for transparency and explainability starts at the foundation, with clear documentation of training data sources, model selection criteria, and the business goals being pursued. Bias detection and fairness testing should be embedded throughout development.
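Fairness tests can be automated and rerun at every training cycle. The sketch below computes two common group metrics from binary decisions: the demographic parity difference (the gap between the highest and lowest selection rates) and the disparate impact ratio (lowest rate divided by highest). The 0.8 cutoff is the widely cited "four-fifths" rule of thumb, used here as an assumed review trigger rather than a legal standard. The data are toy values.

```python
from collections import defaultdict


def selection_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive decisions (1 = approved) within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(decisions: list[int], groups: list[str]) -> dict:
    rates = selection_rates(decisions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        # Demographic parity difference: gap between highest and lowest selection rate.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest selection rate divided by highest.
        "impact_ratio": lowest / highest if highest else 1.0,
    }


# Toy decisions for two groups, invented for illustration.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 5 + ["B"] * 5
report = fairness_report(decisions, groups)
print(report["rates"])  # {'A': 0.8, 'B': 0.2}
if report["impact_ratio"] < 0.8:  # assumed review trigger (four-fifths rule of thumb)
    print(f"Review needed: impact ratio {report['impact_ratio']:.2f}")
```

A flag like this does not prove unfairness on its own; it marks a model for the human review and root-cause analysis that the surrounding process should provide.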

Model selection also plays a role. While deep learning models are powerful, they often lack interpretability. In contrast, decision trees, rule-based systems, and linear models may offer more explainability for certain use cases. Hybrid approaches, where complex models are supplemented by interpretable layers, are also gaining popularity.
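A decision list is one of the simplest interpretable designs: because the model is the rules, the rule that fired is the explanation. Below is a minimal sketch, assuming a hypothetical claims-triage use case; the rules, thresholds, and field names are invented for illustration.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    description: str                   # human-readable reason shown to users
    condition: Callable[[dict], bool]
    decision: str


# Hypothetical claims-triage rules; thresholds are invented for illustration.
RULES = [
    Rule("claim amount above 10,000", lambda c: c["amount"] > 10_000, "manual review"),
    Rule("policy younger than 30 days", lambda c: c["policy_age_days"] < 30, "manual review"),
    Rule("no prior claims", lambda c: c["prior_claims"] == 0, "auto-approve"),
]
DEFAULT_DECISION = "manual review"


def decide(case: dict) -> tuple[str, str]:
    """Check rules in order; the first match sets the outcome and is itself the explanation."""
    for rule in RULES:
        if rule.condition(case):
            return rule.decision, rule.description
    return DEFAULT_DECISION, "no rule matched"


print(decide({"amount": 2_500, "policy_age_days": 400, "prior_claims": 0}))
# ('auto-approve', 'no prior claims')
print(decide({"amount": 15_000, "policy_age_days": 400, "prior_claims": 2}))
# ('manual review', 'claim amount above 10,000')
```

The trade-off is visible here: a rule list this small is easy to audit, but it cannot capture the subtle patterns a deep model might learn.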

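Visualization tools and explanation methods such as SHAP, LIME, and counterfactual explanations can help unpack model behavior in simple terms. SHAP and LIME assign each input feature an importance score for a given prediction, while counterfactual explanations describe a small change to an input that would have produced a different decision. Together they support what-if analyses that non-experts can follow.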
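SHAP is built on Shapley values from cooperative game theory. To show the idea without relying on any library API, the sketch below computes exact Shapley values by brute force for a tiny model. Each feature's attribution is its weighted average marginal effect when it is switched from a baseline input to its actual value, across all subsets of the other features. This single-baseline formulation is one of several variants; the SHAP library uses faster approximations and typically averages over background data. The model and inputs are invented for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[list[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.
    Enumerates every feature subset, so cost grows as 2^n: toy-sized inputs only."""
    n = len(x)

    def value(subset: set[int]) -> float:
        # Features in `subset` take their actual values; all others stay at the baseline.
        return model([x[j] if j in subset else baseline[j] for j in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition size without feature i: 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = set(coalition)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Toy model with a debt-by-late-payments interaction; invented for illustration.
def model(z: list[float]) -> float:
    income, debt, late = z
    return 0.5 * income - 2.0 * debt - 1.0 * late * debt


x = [4.0, 1.0, 2.0]
baseline = [2.0, 0.5, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 3) for p in phi])  # [1.0, -1.5, -1.5]
```

The attributions sum to `model(x) - model(baseline)` (the efficiency property), and the interaction term is split between debt and late payments, something a plain reading of the coefficients would miss.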

Finally, organizations must establish governance structures for AI explainability. This includes setting policies, training teams, and conducting regular audits to ensure that AI systems remain transparent and accountable over time.
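Audits are much easier when every automated decision leaves a trace. Below is a minimal sketch, assuming a JSON Lines decision log whose field names are chosen for illustration (not a standard format). Each decision is appended with its model version and explanation, and a simple audit pass counts decisions that were recorded without one.

```python
import json
import tempfile
from collections import Counter
from datetime import datetime, timezone
from pathlib import Path


def log_decision(log_path: Path, model_version: str, inputs: dict,
                 output: str, explanation: list[str]) -> None:
    """Append one decision as a JSON line so it can be reviewed in later audits."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def audit(log_path: Path) -> dict:
    """Count decisions per model version and how many were logged without an explanation."""
    per_version: Counter = Counter()
    unexplained: Counter = Counter()
    with log_path.open(encoding="utf-8") as f:
        for line in f:
            rec = json.loads(line)
            per_version[rec["model_version"]] += 1
            if not rec["explanation"]:
                unexplained[rec["model_version"]] += 1
    return {"decisions": dict(per_version), "unexplained": dict(unexplained)}


with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "decisions.jsonl"
    log_decision(log, "v3", {"amount": 2_500}, "auto-approve", ["no prior claims"])
    log_decision(log, "v3", {"amount": 12_000}, "manual review", [])
    result = audit(log)
print(result)  # {'decisions': {'v3': 2}, 'unexplained': {'v3': 1}}
```

Recording the model version with each decision lets auditors tie any questionable outcome back to the exact model that produced it.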

Balancing Performance with Interpretability

There is often a perceived trade-off between performance and explainability. More accurate models tend to be more complex, while simpler models are easier to interpret but may underperform.

The key is finding the right balance based on the use case. For high-stakes decisions—such as credit approval, medical diagnosis, or legal judgment—explainability should be prioritized. For low-risk tasks—like recommending content or sorting emails—complexity may be acceptable if monitored appropriately.

Enterprises must define thresholds for acceptable opacity and ensure that critical systems remain auditable and accountable. This balance is not static—it should evolve with the maturity of AI use and the expectations of stakeholders.
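Such thresholds can be written down as an explicit policy and checked before deployment. Below is a minimal sketch, assuming a hypothetical three-tier risk scale and control names chosen for illustration. Each tier lists the explainability controls it requires, and a check reports what a given system is still missing.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical policy: minimum explainability controls required at each risk tier.
POLICY: dict[Risk, set[str]] = {
    Risk.LOW: {"monitoring"},
    Risk.MEDIUM: {"monitoring", "per_decision_explanations"},
    Risk.HIGH: {
        "monitoring",
        "per_decision_explanations",
        "interpretable_model_or_surrogate",
        "human_review",
    },
}


def missing_controls(risk: Risk, controls: set[str]) -> list[str]:
    """Controls required for this tier that the system does not yet have (empty = compliant)."""
    return sorted(POLICY[risk] - controls)


# e.g. email sorting (low risk) vs. credit approval (high risk)
print(missing_controls(Risk.LOW, {"monitoring"}))  # []
print(missing_controls(Risk.HIGH, {"monitoring", "per_decision_explanations"}))
# ['human_review', 'interpretable_model_or_surrogate']
```

Keeping the policy as data rather than prose makes it straightforward to tighten tiers as AI use matures and stakeholder expectations change.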

Creating a Culture of AI Trust

Trust in AI doesn’t come from technology alone—it comes from people. Organizations must foster a culture where transparency and explainability are core principles, not afterthoughts.

This means educating business users on how AI works, empowering them to question decisions, and making the system's logic visible wherever possible. It also means being proactive in engaging regulators, customers, and partners on how AI is being used and governed.

When transparency is built into AI from the start—and explainability is available at every decision point—trust follows naturally.

Conclusion

AI has the potential to become the most transformative force in modern business—but only if people trust it. Transparency and explainability are the keys to earning that trust.

As enterprises scale their AI initiatives, they must do so responsibly—ensuring that every model is not just powerful, but understandable, fair, and accountable. In this new era of intelligent systems, trust is the true currency, and explainable AI is how it’s earned.

The future of AI isn’t just intelligent—it’s transparent.